Python Concurrency: Threads, Processes, and asyncio Explained


Intro

I recently needed to learn Python concurrency. I always thought I’d get to that topic someday. That someday arrived.

The best resource I’ve found is David Beazley’s Python Concurrency From the Ground Up: LIVE! (PyCon 2015). Not only does he live-code without mistakes, he also explains everything very intuitively. This post is a summary of his talk, plus a section on asyncio’s async/await syntax, which wasn’t available yet when the talk was given (it arrived in Python 3.5).

The goal is to understand the differences between threads, processes, and asyncio.

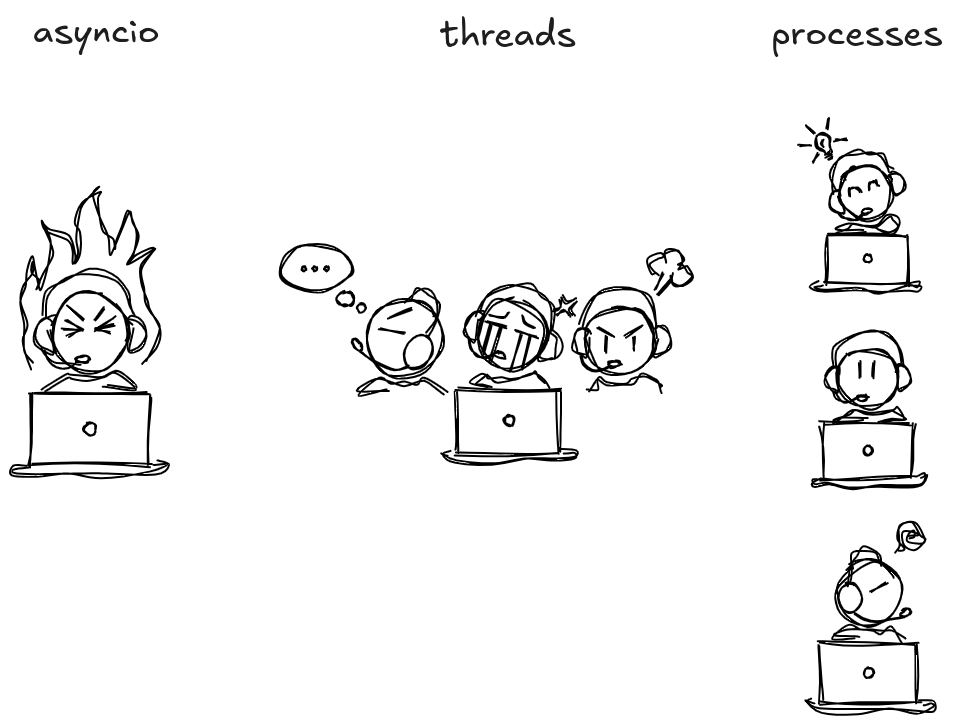

To understand it at a high level, I like this analogy:

- threads are like having many workers share one computer

- processes are like having many workers, each with their own computer

- asyncio is like having one well-organized worker who knows when to switch between different tasks

We’ll explore each one, see what they’re good at, and figure out which you should use for your situation.

First, we need to define concurrency:

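Concurrency in programming means that multiple computations are in progress during overlapping periods of time. They don’t have to run at the exact same instant (that stronger property is called parallelism); each one just makes progress without waiting for the others to finish.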

Setup

To get started, we’re going to need two things:

- First, a CPU-intensive function that helps us see concurrency in action

- Second, a simple server where we can experiment with different approaches

For the first item, we’ll use the Fibonacci sequence, computed with naive recursion. It’s familiar, and because the number of recursive calls grows exponentially with n, it’s an easy source of CPU-bound work. When you’re computing fib(50), you’ll appreciate why it matters.

```python
# fib.py
def fib(n):
    if n <= 2:
        return 1
    else:
        return fib(n - 1) + fib(n - 2)
```

Next, Dave uses socket programming to create a simple TCP server. This is helpful for seeing the effect of multiple calls to a CPU-bound task.

```python
# server.py
from socket import *
from fib import fib

def fib_server(address):
    sock = socket(AF_INET, SOCK_STREAM)
    sock.setsockopt(SOL_SOCKET, SO_REUSEADDR, 1)
    sock.bind(address)
    sock.listen(5)
    while True:
        client, addr = sock.accept()
        print("Connection", addr)
        fib_handler(client)  # serves this client until it disconnects

def fib_handler(client):
    while True:
        req = client.recv(100)
        if not req:  # empty bytes: the client closed the connection
            break
        result = fib(int(req))
        resp = str(result).encode("ascii") + b"\n"
        client.send(resp)
    client.close()

fib_server(("", 25000))
```

What’s happening here:

- A server is created that continuously listens for connections

- For each connection, it calls fib_handler

- It handles only one client at a time, because fib_handler keeps looping until that client disconnects, and only then does the server go back to accept()

Run the server with python server.py, then open a connection to it so you can start sending requests:

```
telnet localhost 25000
```

Now you can type any number, and the server will reply with the result of fib(n). But as we mentioned earlier, it can only handle one connection at a time. You can test this by running telnet localhost 25000 in another terminal and typing a number: you won’t get a result until the first connection is closed.
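If telnet isn’t available (it often isn’t installed by default on recent macOS and Windows), a few lines of Python can play the client’s role. This is a minimal sketch, not part of Dave’s code: the helper name ask_fib is made up for illustration, and it assumes the server from server.py is listening on localhost port 25000.

```python
import socket

def ask_fib(n, host="localhost", port=25000):
    """Send one number to the fib server and return its reply as an int.

    ask_fib is a hypothetical helper for this post, not part of the talk's code.
    """
    with socket.create_connection((host, port)) as sock:
        sock.sendall(str(n).encode("ascii"))
        # The server replies with b"<result>\n". For a small local experiment
        # one recv() is enough, although TCP itself doesn't guarantee that.
        reply = sock.recv(100)
    # int() accepts bytes and ignores the trailing newline
    return int(reply)
```

With the server running, ask_fib(10) should return 55. Each call opens and closes its own connection, so while a telnet session is still connected to the single-threaded server, a call will hang just like a second telnet session does.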

Now that we understand the basic server setup and its limitations, let’s see how threads can help us solve this problem.

Threads

We can use threads to help us handle multiple connections simultaneously.

```python
from socket import *
from fib import fib
from threading import Thread

def fib_server(address):
    sock = socket(AF_INET, SOCK_STREAM)
    sock.setsockopt(SOL_SOCKET, SO_REUSEADDR, 1)
    sock.bind(address)
    sock.listen(5)
    while True:
        client, addr = sock.accept()
        print("Connection", addr)
        # Hand the client to a new thread; the main loop goes back to accept()
        Thread(target=fib_handler, args=(client,)).start()

def fib_handler(client):
    while True:
        req = client.recv(100)
        if not req:
            break
        result = fib(int(req))
        resp = str(result).encode("ascii") + b"\n"
        client.send(resp)
    client.close()

fib_server(("", 25000))
```

You can check that this works by opening a connection (and typing numbers) in two or more terminals:

```
telnet localhost 25000
```

Why does this work? Each client connection is handled in its own thread, so the main loop can go straight back to accept() and wait for the next client.

Now let’s stress-test our server! The following are performance scripts from Dave’s talk.

```python
# perf1.py: time of a long-running request
from socket import *
import time

sock = socket(AF_INET, SOCK_STREAM)
sock.connect(("localhost", 25000))

while True:
    start = time.time()
    sock.send(b"30")
    resp = sock.recv(100)
    end = time.time()
    print(end - start)
```

This script, perf1.py, simulates a long-running request by repeatedly asking for fib(30). If we run it with python perf1.py in one terminal, we might see something like:

```
> python perf1.py
0.17196965217590332
0.17380785942077637
0.16659832000732422
0.18915700912475586
...
```

This shows how long each request takes. Now, if we keep this running and start a second copy in another terminal window, we see something like this:

```
> python perf1.py
0.36538004875183105
0.2956357002258301
0.288482666015625
0.309873104095459
0.2971019744873047
```

In both terminal windows, the time it takes to run fib(30) has roughly doubled. The two threads are effectively taking turns rather than running in parallel, so the runtime grows roughly linearly with the number of busy threads.

Now, Dave asks us an interesting question: ‘What happens if we mix a long-running request with very short-running requests?’

We can simulate short running requests with this script:

```python
# perf2.py: requests/sec of fast requests
from socket import *
import time
from threading import Thread

sock = socket(AF_INET, SOCK_STREAM)
sock.connect(("localhost", 25000))

n = 0

def monitor():
    # Once per second, report how many requests completed, then reset the count
    global n
    while True:
        time.sleep(1)
        print(n, "reqs/sec")
        n = 0

Thread(target=monitor).start()

while True:
    sock.send(b"1")
    resp = sock.recv(100)
    n += 1
```

This script, perf2.py, repeatedly asks for fib(1), which is a very fast computation compared to perf1.py’s fib(30).

Now let’s run perf2.py, followed by perf1.py:

```
> python perf2.py
66683 reqs/sec
70768 reqs/sec
59795 reqs/sec
```

```
> python perf1.py
0.17106914520263672
0.1491072177886963
0.14998102188110352
```

If we look back at the terminal running perf2.py, we can see a significant drop in reqs/sec:

```
> python perf2.py
66683 reqs/sec
70768 reqs/sec
59795 reqs/sec
...
71 reqs/sec
126 reqs/sec
108 reqs/sec
```

Our fast-request script slows down by a factor of roughly 600! But the long-running request still takes about the same amount of time. What’s happening here?

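Here’s where we run into the infamous global interpreter lock (GIL). The GIL prevents multiple threads from executing Python bytecode at the same time. So what happens if you have a long-running request in one thread and very short requests in another? As our experiment shows, the CPU-bound thread effectively gets priority. Every time the fast thread does I/O (recv or send), it releases the GIL, and to continue it must wait for the CPU-bound thread to give the GIL back, which CPython only forces after the thread switch interval (5 ms by default). A thread that does I/O thousands of times per second therefore spends most of its time waiting.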

We can also see here that we have no control over when a task switch occurs. We’ll see later how that contrasts with asyncio, which lets you control when task switches happen.

This is why you may have heard Python developers say you shouldn’t use threads. Many have been burned by scenarios where an app ground to a halt because of a long-running, CPU-bound request in a thread.
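You can see the GIL’s effect without any sockets by timing the same CPU-bound work run sequentially and in threads. This is a rough sketch, not from the talk: run_sequential and run_threaded are made-up helper names, and timings vary by machine. On a standard CPython build (with the GIL), expect the threaded version to take about as long as the sequential one, since only one thread executes bytecode at a time. Free-threaded builds, optional since Python 3.13, behave differently.

```python
import sys
import time
from threading import Thread

def fib(n):
    # Same naive recursive Fibonacci as fib.py: pure CPU work
    if n <= 2:
        return 1
    return fib(n - 1) + fib(n - 2)

def run_sequential(n, count):
    # Compute fib(n) `count` times, one after another
    return [fib(n) for _ in range(count)]

def run_threaded(n, count):
    # Compute fib(n) `count` times, one thread per computation
    results = [None] * count

    def worker(i):
        results[i] = fib(n)

    threads = [Thread(target=worker, args=(i,)) for i in range(count)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return results

if __name__ == "__main__":
    # The interpreter's thread switch interval in seconds (0.005 by default)
    print("switch interval:", sys.getswitchinterval())
    for runner in (run_sequential, run_threaded):
        start = time.perf_counter()
        runner(25, 4)
        elapsed = time.perf_counter() - start
        print(f"{runner.__name__}: {elapsed:.2f} s")
```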

While threads helped us with concurrent connections, the GIL was a significant bottleneck. Our next approach uses processes to parallelize the work.

Processes

Processes let us sidestep the GIL: each process runs its own Python interpreter with its own GIL, so CPU-bound work in separate processes can run in parallel. Let’s take a look at how we can implement this in our server:

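```python
from socket import *
from fib import fib
from threading import Thread
from concurrent.futures import ProcessPoolExecutor as Pool

def fib_server(address):
    sock = socket(AF_INET, SOCK_STREAM)
    sock.setsockopt(SOL_SOCKET, SO_REUSEADDR, 1)
    sock.bind(address)
    sock.listen(5)
    while True:
        client, addr = sock.accept()
        print("Connection", addr)
        Thread(target=fib_handler, args=(client,), daemon=True).start()

def fib_handler(client):
    while True:
        req = client.recv(100)
        if not req:
            break
        n = int(req)
        future = pool.submit(fib, n)  # run fib(n) in a worker process
        result = future.result()      # blocks only this client's thread
        resp = str(result).encode("ascii") + b"\n"
        client.send(resp)
    client.close()
    print("Closed")

if __name__ == "__main__":
    # The guard matters where workers are started with "spawn" (the default on
    # Windows and macOS): each worker re-imports the main module, and without
    # the guard it would try to start its own server.
    pool = Pool(4)  # 4 worker processes
    fib_server(("", 25000))
```

We’re using ProcessPoolExecutor from concurrent.futures since it offers a higher-level abstraction (submit a function, get back a future) and some niceties compared to managing processes by hand.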

Now let’s run both perf1.py and perf2.py again and see what happens.

```
> python perf2.py
3493 reqs/sec
3527 reqs/sec
3844 reqs/sec
```

```
> python perf1.py
0.2727804183959961
0.19918036460876465
0.2786672115325928
```

Now let’s go back to perf2.py’s terminal and see what happened after perf1.py started:

```
> python perf2.py
3493 reqs/sec
3527 reqs/sec
3844 reqs/sec
...
3087 reqs/sec
2785 reqs/sec
3090 reqs/sec
```

We can make a few observations here:

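- Both performance scripts are slower with processes, and perf2.py dramatically so (about 3,500 reqs/sec instead of 60,000 to 70,000). This is the overhead of processes: every request, even fib(1), has to be sent to a worker process and its result sent back, which costs far more than a function call inside one process.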

- Running both scripts at the same time did not significantly reduce perf2.py’s reqs/sec. This is because fib(30) now runs in a separate worker process with its own GIL, so it no longer starves the thread serving the fast requests.

Processes solved the concurrency problem for CPU-intensive work, but they come with overhead. For tasks that aren’t CPU-intensive (I/O-bound tasks such as waiting on the network), there’s another approach that avoids the overhead of both threads and processes.

Asynchronous Programming

At this point, Dave introduces asynchronous programming. With threads, we can’t control when each thread becomes active or inactive: the OS (together with the GIL) decides that. With asynchronous programming, the code itself marks the points where a switch can happen.

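This means you often don’t need locks: code between two switch points runs without interruption from other tasks. Switching between tasks is also very cheap, since it happens within a single thread rather than through an OS thread switch.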

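He demonstrates this by using yield to create generator-based coroutines, along with a small scheduler that runs them. You can find his example in the talk’s code repository (dabeaz/concurrencylive, listed in Resources).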
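The core idea fits in a few lines. The sketch below is not Dave’s code (his version also deals with waiting on sockets); it’s a toy round-robin scheduler, with made-up names countdown and run, where each yield is the point at which a task voluntarily hands control back to the scheduler.

```python
from collections import deque

def countdown(name, n, log):
    # A generator-based "task": each yield hands control back to the scheduler
    while n > 0:
        log.append(f"{name}:{n}")
        n -= 1
        yield

def run(tasks):
    # Round-robin scheduler: resume each task until its next yield
    ready = deque(tasks)
    while ready:
        task = ready.popleft()
        try:
            next(task)        # run the task up to its next yield
        except StopIteration:
            continue          # the task finished; drop it
        ready.append(task)    # not finished; back of the queue

log = []
run([countdown("A", 3, log), countdown("B", 2, log)])
print(log)  # ['A:3', 'B:2', 'A:2', 'B:1', 'A:1']
```

The two tasks interleave even though there’s only one thread, and the switches happen exactly at the yields, never in the middle of log.append.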

This is where I’ll take a bit of a detour from the video and do something similar using asyncio. asyncio lets you write concurrent code with the async/await syntax, where every await marks a point at which a coroutine may be suspended, so you decide where task switches can happen.

Let’s implement this in a simple way:

```python
import asyncio
from socket import *
from fib import fib

async def fib_server(address):
    sock = socket(AF_INET, SOCK_STREAM)
    sock.setsockopt(SOL_SOCKET, SO_REUSEADDR, 1)
    sock.bind(address)
    sock.listen(5)
    sock.setblocking(False)  # the loop's sock_* methods need non-blocking sockets

    loop = asyncio.get_event_loop()  # inside a coroutine: the running loop
    tasks = set()  # keep references so handler tasks aren't garbage-collected

    while True:
        client, addr = await loop.sock_accept(sock)
        print("Connection", addr)
        task = loop.create_task(fib_handler(client, loop))
        tasks.add(task)
        task.add_done_callback(tasks.discard)

async def fib_handler(client, loop):
    while True:
        req = await loop.sock_recv(client, 100)
        if not req:
            break
        result = fib(int(req))  # CPU-bound: runs on the event loop's thread
        resp = str(result).encode("ascii") + b"\n"
        await loop.sock_sendall(client, resp)
    client.close()

if __name__ == "__main__":
    asyncio.run(fib_server(("", 25000)))
```

Note: Typically you would use asyncio’s higher-level server API (such as asyncio.start_server) rather than raw sockets. But since we want to focus on asynchronous programming, we’ll keep the raw socket operations so we can compare this directly with the previous approaches.

What’s happening here?

- Event loop: asyncio.get_event_loop() returns the event loop (inside a coroutine, the one that is currently running), which is the core of asyncio’s concurrency model. The event loop runs asynchronous tasks and callbacks.

- Listening for connections: await loop.sock_accept(sock) means that while the server is waiting for a connection, the loop can run other tasks.

- Handling connections: loop.create_task(fib_handler(client, loop)) schedules the fib_handler coroutine as a task that runs independently of the main server loop.

- Fib handler: await loop.sock_recv(client, 100) waits for data without blocking the event loop, and await loop.sock_sendall(client, resp) sends the result of fib back to the client, also without blocking. If no data is received, the handler’s while loop exits and the client socket is closed.
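For comparison, here’s roughly what the same server could look like with asyncio’s higher-level streams API, the more typical setup mentioned in the note above. This is a sketch, not from the talk: handle_client and main are names chosen for illustration, fib is defined inline instead of imported, and reader.read(100) mirrors the raw version’s recv(100) so perf1.py and perf2.py still work against it.

```python
import asyncio

def fib(n):
    if n <= 2:
        return 1
    return fib(n - 1) + fib(n - 2)

async def handle_client(reader, writer):
    # Called once per connection; the streams API manages the socket for us
    print("Connection", writer.get_extra_info("peername"))
    while True:
        data = await reader.read(100)  # b"" once the client disconnects
        if not data:
            break
        result = fib(int(data))
        writer.write(str(result).encode("ascii") + b"\n")
        await writer.drain()           # wait until the send buffer drains
    writer.close()
    await writer.wait_closed()

async def main(host="", port=25000):
    # An empty host string means "listen on all interfaces"
    server = await asyncio.start_server(handle_client, host, port)
    async with server:
        await server.serve_forever()

# To start it from a script: asyncio.run(main())
```

The structure is the same as before (accept, then one handler per client), but start_server takes care of the listening socket, accepting, and creating a task per connection.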

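A major difference between asyncio and threads is that asyncio handles concurrent connections with coroutine tasks rather than OS threads: everything runs on a single thread with an event loop. Tasks are much cheaper than OS threads, so asyncio has less overhead and is better suited to managing many more concurrent connections. The flip side is that a CPU-bound call like fib(30) inside a handler blocks the entire event loop, so every other connection waits until it finishes.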
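One way to keep a CPU-bound call from stalling the loop is to hand it to an executor with loop.run_in_executor. In the asyncio fib_handler, that would mean replacing result = fib(int(req)) with result = await loop.run_in_executor(pool, fib, int(req)), where pool is an executor you create yourself. The self-contained sketch below is not from the talk (ticker and main are illustrative names); it uses the default executor, a thread pool, and shows the loop still running another task while fib works. With a thread pool, the GIL still keeps fib from running in parallel with other Python code; passing a concurrent.futures.ProcessPoolExecutor instead gives real parallelism, at the cost of the per-request overhead seen in the Processes section.

```python
import asyncio

def fib(n):
    if n <= 2:
        return 1
    return fib(n - 1) + fib(n - 2)

async def ticker(stop):
    # Stands in for "other connections": counts how often it gets to run
    ticks = 0
    while not stop.is_set():
        ticks += 1
        await asyncio.sleep(0.01)
    return ticks

async def main(n=27):
    loop = asyncio.get_running_loop()
    stop = asyncio.Event()
    tick_task = asyncio.create_task(ticker(stop))
    # None selects the loop's default executor (a thread pool), so the
    # blocking fib(n) runs off the event loop and ticker() keeps running
    result = await loop.run_in_executor(None, fib, n)
    stop.set()
    ticks = await tick_task
    return result, ticks

if __name__ == "__main__":
    result, ticks = asyncio.run(main())
    print(result, "computed while the ticker ran", ticks, "times")
```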

Now that we’ve explored three different approaches to Python concurrency, let’s summarize when each one makes sense.

Summary

This is a bit simplistic, but here’s my takeaway for deciding when to use each:

```python
if cpu_intensive:
    'processes'
else:
    if suited_for_threads:
        'threads'
    elif suited_for_asyncio:
        'asyncio'
```

If your tasks are CPU-bound and intensive, processes are the right tool: each worker process has its own interpreter and GIL, so the work runs in parallel across CPU cores instead of taking turns.

But what about threads vs asyncio? In what situation would you use each?

Let’s summarize what we explored above (the Python.org discussion “What are the advantages of asyncio over threads?”, listed in Resources, is also helpful, with comments from CPython core developers).

Advantages of asyncio:

- Lower overhead: tasks run on a single thread and are much cheaper than OS threads, so you can reasonably have many thousands of concurrent tasks

- Visible scheduling points: every await marks a place where a task switch can happen, which helps with reasoning about data races and with debugging

- Tasks support cancellation
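Cancellation deserves a quick illustration. In this small sketch (slow_job and main are illustrative names, and asyncio.sleep stands in for a slow network call), task.cancel() raises CancelledError inside the task at the await where it’s suspended, which gives it a chance to clean up before it stops.

```python
import asyncio

async def slow_job(log):
    try:
        await asyncio.sleep(10)   # pretend this is a slow network call
        log.append("finished")
    except asyncio.CancelledError:
        log.append("cancelled")   # a chance to clean up
        raise                     # re-raise so the task is marked cancelled

async def main():
    log = []
    task = asyncio.create_task(slow_job(log))
    await asyncio.sleep(0.1)      # let the job start and reach its await
    task.cancel()                 # request cancellation
    try:
        await task
    except asyncio.CancelledError:
        pass
    return log, task.cancelled()

print(asyncio.run(main()))  # (['cancelled'], True)
```

Threads have no equivalent: there’s no supported way to stop a running thread from the outside, so you have to build your own signalling (for example with a threading.Event that the thread checks).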

Disadvantages of asyncio:

- Limited third-party support. You can’t await an async function from a regular (synchronous) function, and a blocking call inside a coroutine stalls the whole event loop, so you need non-blocking, async-aware versions of the libraries you use, and those don’t always exist.

- More complicated if developers aren’t familiar with event loops and coroutines
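Here’s the sync/async boundary in miniature. Calling a coroutine function from regular code only creates a coroutine object; nothing runs until an event loop drives it (for example with asyncio.run). Going the other way, a blocking call inside a coroutine freezes the loop unless you push it onto a thread, for instance with asyncio.to_thread (Python 3.9+). In this sketch, blocking_lookup is a hypothetical stand-in for a synchronous third-party library call.

```python
import asyncio
import time

def blocking_lookup(key):
    # Hypothetical stand-in for a synchronous (blocking) library call
    time.sleep(0.1)
    return key.upper()

async def fetch(key):
    # Run the blocking call in a worker thread so the event loop stays free
    return await asyncio.to_thread(blocking_lookup, key)

async def main():
    # The three lookups overlap instead of running back to back
    return await asyncio.gather(fetch("a"), fetch("b"), fetch("c"))

coro = fetch("x")
print(type(coro).__name__)  # coroutine: calling it from sync code doesn't run it
coro.close()                # discard it without a "never awaited" warning
print(asyncio.run(main()))  # ['A', 'B', 'C']
```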

Advantages of threads:

- Ease of integration, works well with existing code

- Requires very little tooling (just locks and queues)

Disadvantages of threads:

- Overhead: more memory and processing overhead than asyncio

- Complexity of thread safety: more difficult to debug race conditions, locks, …
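Here’s what the “locks and queues” tooling looks like in practice, and where thread safety comes in. This sketch (run_workers is an illustrative name, not from the talk) hands numbers to worker threads through a queue.Queue, which is already thread-safe, and uses a threading.Lock around the shared state, because done += 1 is a read-modify-write that two threads could otherwise interleave.

```python
import queue
import threading

def fib(n):
    if n <= 2:
        return 1
    return fib(n - 1) + fib(n - 2)

def run_workers(numbers, num_workers=4):
    jobs = queue.Queue()          # thread-safe FIFO of work items
    results = {}
    done = 0
    lock = threading.Lock()       # guards `results` and `done`

    def worker():
        nonlocal done
        while True:
            n = jobs.get()
            if n is None:         # sentinel: no more work for this thread
                break
            value = fib(n)        # computed outside the lock
            with lock:            # one thread at a time updates shared state
                results[n] = value
                done += 1

    threads = [threading.Thread(target=worker) for _ in range(num_workers)]
    for t in threads:
        t.start()
    for n in numbers:
        jobs.put(n)
    for _ in threads:
        jobs.put(None)            # one sentinel per worker
    for t in threads:
        t.join()
    return dict(sorted(results.items())), done

print(run_workers([10, 15, 20]))  # ({10: 55, 15: 610, 20: 6765}, 3)
```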

When should you use asyncio?

- when your tasks are mainly I/O-bound (e.g., network requests, socket connections)

- when you want to efficiently manage many concurrent tasks

When should you use threads?

- when working with existing systems where it’s easier to continue using threads

- when the core third-party libraries you depend on are synchronous or designed to be used with threads

Understanding the strengths and limitations of threads, processes, and asyncio will help us choose the right tool to improve performance and efficiency.

Resources

- David Beazley - Python Concurrency From the Ground Up: LIVE! - PyCon 2015 - YouTube

- GitHub - dabeaz/concurrencylive: Code from Concurrency Live - PyCon 2015

- threading — Thread-based parallelism — Python documentation

- multiprocessing — Process-based parallelism — Python documentation

- asyncio — Asynchronous I/O — Python documentation

- What are the advantages of asyncio over threads? Discussions on Python.org

- Concurrency - Python Wiki

- Raymond Hettinger, Keynote on Concurrency, PyBay 2017 - YouTube

    - Great talk about how to use threads safely and reduce the risk of race conditions
