Combining vector and lexical search

I was recently developing a RAG (retrieval-augmented generation) app that used vector search, with embeddings generated by OpenAI and stored in pgvector. It worked quite well, but there were some areas where I wasn't satisfied with the search results. Could we improve the search process with more traditional methods?

I found that a hybrid search approach, adding a lexical method like BM25, did improve the search results.

Someone recommended a useful paper, BERT-based Dense Retrievers Require Interpolation with BM25 for Effective Passage Retrieval, which looked at interpolating BM25 and BERT-based dense retrievers. I’ve also seen other anecdotes that combining vector and lexical search yields better performance than either alone.

The paper reports significant gains from using both approaches. Their explanation is that dense retrievers are very effective at encoding strong relevance signals but fail to identify weaker ones, and interpolating with BM25 makes up for this.

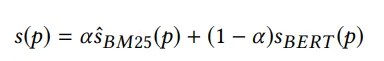

The interpolation looks like this:

$$
s(p) = \alpha \cdot s_{\text{BM25}}(p) + (1 - \alpha) \cdot s_{\text{BERT}}(p)
$$

It takes a weighted sum of the BM25 and BERT scores to produce a final relevance score s(p) for each passage p. The weight α (between 0 and 1) sets the balance between the two methods: when α is closer to 1, the BM25 score counts for more; when α is closer to 0, the BERT score does. The best value of α depends on the data and is found by testing on a validation set.
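
A minimal Python sketch of the interpolation, assuming you already have a BM25 score and a dense (embedding) similarity score for every candidate passage. The min-max normalization step is my own assumption, not something stated above: raw BM25 scores are unbounded while cosine similarities fall in [-1, 1], so rescaling both to [0, 1] keeps α meaningful. The function names and scores are hypothetical.

```python
def min_max_normalize(scores: list[float]) -> list[float]:
    """Rescale scores to [0, 1]; returns all zeros if every score is equal."""
    lo, hi = min(scores), max(scores)
    if hi == lo:
        return [0.0 for _ in scores]
    return [(s - lo) / (hi - lo) for s in scores]


def interpolate(bm25_scores: list[float], dense_scores: list[float], alpha: float) -> list[float]:
    """s(p) = alpha * BM25(p) + (1 - alpha) * dense(p), computed on normalized scores."""
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be between 0 and 1")
    if len(bm25_scores) != len(dense_scores):
        raise ValueError("score lists must cover the same passages")
    bm25_norm = min_max_normalize(bm25_scores)
    dense_norm = min_max_normalize(dense_scores)
    return [alpha * b + (1 - alpha) * d for b, d in zip(bm25_norm, dense_norm)]


# Hypothetical scores for three passages, listed in the same order in both lists.
bm25 = [7.2, 1.1, 3.5]
dense = [0.62, 0.91, 0.70]
combined = interpolate(bm25, dense, alpha=0.3)
# Passage indices sorted from most to least relevant.
ranking = sorted(range(len(combined)), key=lambda i: combined[i], reverse=True)
print(ranking)  # → [1, 2, 0]
```

With α = 0.3 the dense score dominates, so passage 1 ranks first even though it has the lowest BM25 score.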

There's a simple library for BM25: rank_bm25. I like it because it implements a few BM25 variants (such as BM25Okapi, BM25L and BM25Plus) and links to a paper that compares them. For a given query, it also returns a score for every document in the corpus, not just the top matches.
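
To show what a BM25 score actually measures, here is a small, stdlib-only sketch of the Okapi BM25 formula. It is not rank_bm25's code: the function name is hypothetical, k1 = 1.5 and b = 0.75 are common defaults, and it uses the non-negative IDF form log(1 + (N - n + 0.5) / (n + 0.5)), whereas rank_bm25's BM25Okapi uses a slightly different IDF. With the library itself you would build `BM25Okapi(tokenized_corpus)` and call `get_scores(tokenized_query)`, which returns one score per document.

```python
import math
from collections import Counter


def bm25_scores(query: list[str], corpus: list[list[str]], k1: float = 1.5, b: float = 0.75) -> list[float]:
    """Score every tokenized document in `corpus` against a tokenized `query` with Okapi BM25."""
    n_docs = len(corpus)
    avgdl = sum(len(doc) for doc in corpus) / n_docs
    # Document frequency: how many documents contain each term at least once.
    df = Counter(term for doc in corpus for term in set(doc))
    scores = []
    for doc in corpus:
        tf = Counter(doc)
        score = 0.0
        for term in query:
            if term not in tf:
                continue
            # Non-negative IDF variant (assumption; rank_bm25 uses a different form).
            idf = math.log(1 + (n_docs - df[term] + 0.5) / (df[term] + 0.5))
            freq = tf[term]
            # Term-frequency saturation (k1) and document-length normalization (b).
            norm = freq + k1 * (1 - b + b * len(doc) / avgdl)
            score += idf * freq * (k1 + 1) / norm
        scores.append(score)
    return scores


corpus = [
    "hybrid search combines vector and lexical retrieval",
    "pgvector stores embeddings in postgres",
    "bm25 is a lexical ranking function",
]
# Naive whitespace tokenization, for illustration only.
tokenized = [doc.split() for doc in corpus]
print(bm25_scores("lexical ranking".split(), tokenized))
```

The second document shares no terms with the query and scores 0, while the third matches both query terms and scores highest. These are the kind of scores that would feed into the interpolation.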

An alternative is OpenSearch, but that requires more setup. I went with rank_bm25 since it was simpler to use. You'll have to experiment yourself with the different approaches and interpolation weights.
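
Since the best α depends on your data, one way to experiment is a simple grid search over a small labelled validation set. This sketch assumes each validation query comes with BM25 scores, dense scores, and the index of the single relevant passage, and it uses mean reciprocal rank (MRR) as the metric; the data format, the metric and the helper names are all my assumptions, and the numbers are made up.

```python
def min_max(scores: list[float]) -> list[float]:
    """Rescale scores to [0, 1]; all zeros if every score is equal."""
    lo, hi = min(scores), max(scores)
    return [0.0] * len(scores) if hi == lo else [(s - lo) / (hi - lo) for s in scores]


def reciprocal_rank(combined: list[float], relevant: int) -> float:
    """1 / rank of the relevant passage in the combined ordering (ranks start at 1)."""
    order = sorted(range(len(combined)), key=lambda i: combined[i], reverse=True)
    return 1.0 / (order.index(relevant) + 1)


def tune_alpha(validation, steps: int = 10) -> tuple[float, float]:
    """Grid-search alpha in [0, 1] and return (best_alpha, best_mrr); the first best alpha wins ties."""
    best_alpha, best_mrr = 0.0, -1.0
    for k in range(steps + 1):
        alpha = k / steps
        total = 0.0
        for bm25, dense, relevant in validation:
            b, d = min_max(bm25), min_max(dense)
            combined = [alpha * x + (1 - alpha) * y for x, y in zip(b, d)]
            total += reciprocal_rank(combined, relevant)
        mrr = total / len(validation)
        if mrr > best_mrr:
            best_alpha, best_mrr = alpha, mrr
    return best_alpha, best_mrr


# Hypothetical validation data: (BM25 scores, dense scores, index of the relevant passage).
validation = [
    ([6.0, 0.0, 2.0], [0.7, 0.9, 0.5], 0),  # keyword match that the embeddings under-rate
    ([4.0, 1.0, 0.0], [0.4, 0.9, 0.5], 1),  # semantic match that BM25 under-rates
]
print(tune_alpha(validation))  # → (0.4, 1.0)
```

On this toy data, dense-only (α = 0) and BM25-only (α = 1) each reach an MRR of 0.75, while α = 0.4 ranks the relevant passage first for both queries. With real data you would want far more validation queries than this.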

Have some thoughts on this post? Reply with an email.